Learning - Prometheus Exporter

Steps to monitor MongoDB metrics

- Deploy MongoDB App

- Deploy MongoDB Exporter

- Deploy ServiceMonitor

Deployment

minikube start --cpus 4 --memory 8192 --driver hyperkit

helm ls

kubectl get pod

kubectl get svc

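kubectl port-forward service/prometheus-kube-prometheus-prometheus 9090

kubectl port-forward service/prometheus-grafana 80

ServiceMonitor

ServiceMonitor is a custom Kubernetes resource, defined by a CustomResourceDefinition (CRD) installed with the Prometheus Operator, that tells Prometheus which Services to scrape.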

kubectl get servicemonitor

kubectl get servicemonitor prometheus-kube-prometheus-grafana -oyaml

...
metadata:
  labels:
    release: prometheus
spec:
  endpoints:
  - path: /metrics
    port: service
  selector:
    matchLabels:
      app.kubernetes.io/instance: prometheus
      app.kubernetes.io/name: grafana

CRD configuration

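$ kubectl get crd
...
prometheuses.monitoring.coreos.com ...
...

$ kubectl get prometheuses.monitoring.coreos.com -oyaml
...
spec:
  serviceMonitorSelector:
    matchLabels:
      release: prometheus
...

Prometheus only picks up ServiceMonitors whose labels match this serviceMonitorSelector, which is why the Grafana ServiceMonitor above carries the label release: prometheus.

Deploy MongoDB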

mongodb-without-exporter.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongodb-deployment
  labels:
    app: mongodb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongodb
  template:
    metadata:
      labels:
        app: mongodb
    spec:
      containers:
      - name: mongodb
        image: mongo
        ports:
        - containerPort: 27017
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb-service
spec:
  selector:
    app: mongodb
  ports:
    - protocol: TCP
      port: 27017
      targetPort: 27017

kubectl apply -f mongodb-without-exporter.yaml

kubectl get pod

Deploy MongoDB Exporter

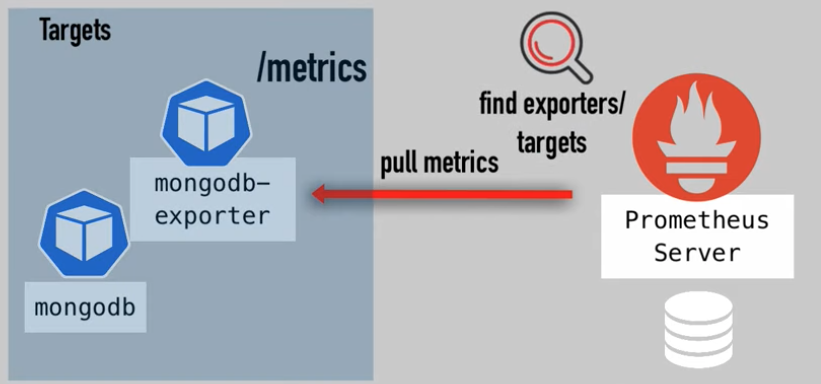

An exporter is a translator between an application's data and metrics that Prometheus understands:

Target (MongoDB App) <= fetches metrics <= converts to correct format <= expose /metrics <= Prometheus Server

- Runs as a separate deployment, so the application's own config files don't need to change

The MongoDB exporter (mongodb-exporter) can be downloaded from the exporters site or from Docker Hub.

Exporter Site

Exporters can be downloaded from https://prometheus.io/docs/instrumenting/exporters

Node exporter - translates metrics of the cluster nodes and exposes /metrics, e.g. the node exporter pod deployed with the Prometheus stack:

prometheus-prometheus-node-exporter-8qvwn

Components for exporter

- Exporter application - exposes the /metrics endpoint

- Service - for connecting to the exporter

- ServiceMonitor - so that Prometheus discovers the exporter
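The three components listed above can also be written by hand instead of using a Helm chart. The manifest below is a minimal sketch, not the chart's actual output. It assumes the Percona image `percona/mongodb_exporter` (the tag `0.40.0` is an assumption; pin one that exists), the exporter's `--mongodb.uri` flag, its default port 9216, and hypothetical resource names `mongodb-exporter`:

```yaml
# Hypothetical hand-written exporter setup: Deployment + Service + ServiceMonitor.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongodb-exporter              # hypothetical name
  labels:
    app: mongodb-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongodb-exporter
  template:
    metadata:
      labels:
        app: mongodb-exporter
    spec:
      containers:
      - name: mongodb-exporter
        image: percona/mongodb_exporter:0.40.0   # assumed tag
        args:
        # Connect to MongoDB through its Service (assumed flag name)
        - --mongodb.uri=mongodb://mongodb-service:27017
        ports:
        - name: metrics
          containerPort: 9216                    # exporter serves /metrics here
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb-exporter
  labels:
    app: mongodb-exporter       # the ServiceMonitor selects the Service by this label
spec:
  selector:
    app: mongodb-exporter       # routes traffic to the exporter pod
  ports:
  - name: metrics               # referenced by name in the ServiceMonitor endpoint
    port: 9216
    targetPort: metrics
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: mongodb-exporter
  labels:
    release: prometheus         # must match the Prometheus serviceMonitorSelector
spec:
  selector:
    matchLabels:
      app: mongodb-exporter
  endpoints:
  - port: metrics
    path: /metrics
```

Applied with kubectl apply -f, this should show up as a Prometheus target the same way the Helm-installed exporter does, because the ServiceMonitor carries the release: prometheus label.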

Helm chart for exporter

Search for mongodb-exporter helm chart

https://github.com/prometheus-community/helm-charts

Override values using chart parameters

helm show values <chart-name>

Add Helm repo

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm show values prometheus-community/prometheus-mongodb-exporter > values.yaml

Override values in values.yaml

mongodb:
  uri: "mongodb://mongodb-service:27017"
serviceMonitor:
  additionalLabels:
    release: prometheus

With the label release: prometheus, Prometheus automatically discovers the new ServiceMonitor in the cluster.
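Other keys in the chart's serviceMonitor block can be overridden the same way. A sketch, assuming the keys `interval` and `scrapeTimeout` exist in the installed chart version (check them against the helm show values output before relying on them):

```yaml
mongodb:
  uri: "mongodb://mongodb-service:27017"
serviceMonitor:
  interval: 30s          # assumed key: how often Prometheus scrapes the exporter
  scrapeTimeout: 10s     # assumed key: must not be longer than the interval
  additionalLabels:
    release: prometheus  # matched by the Prometheus serviceMonitorSelector
```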

$ helm install mongodb-exporter prometheus-community/prometheus-mongodb-exporter -f values.yaml

...

$ helm ls

mongodb-exporter

...

$ kubectl get pod

...

mongodb-exporter-prometheus-mongodb-exporter-75...

...

$ kubectl get svc

...

mongodb-exporter-prometheus-mongodb-exporter

...

$ kubectl get servicemonitor

...

mongodb-exporter-prometheus-mongodb-exporter

...

$ kubectl get servicemonitor mongodb-exporter-prometheus-mongodb-exporter -o yaml
...
metadata:
  labels:
    release: prometheus
...

Check endpoint /metrics

$ kubectl get svc

...

mongodb-exporter-prometheus-mongodb-exporter

...

kubectl port-forward service/mongodb-exporter-prometheus-mongodb-exporter 9216

Access http://127.0.0.1:9216/metrics

The mongodb-exporter is added as a target in Prometheus because its ServiceMonitor carries the label release: prometheus and is therefore discovered automatically.

MongoDB metrics data in Grafana UI

kubectl get deployment

kubectl port-forward deployment/prometheus-grafana 3000

References

Prometheus Monitoring - Steps to monitor third-party apps using Prometheus Exporter | Part 2