Learning - Setup Prometheus Monitoring on Kubernetes

Prometheus Server

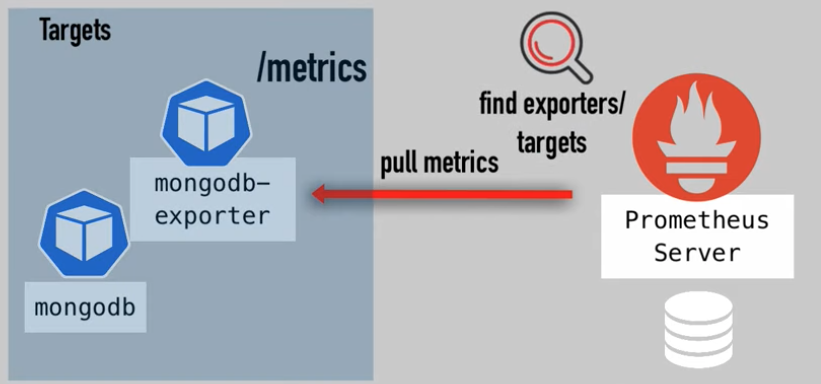

- Retrieval (Data Retrieval Worker): pulls metrics data from targets

- Storage (Time Series Database): stores the metrics data

- HTTP Server: accepts PromQL queries
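To make the "HTTP Server: accepts PromQL queries" part concrete, here is a minimal shell sketch of sending a query to it. It assumes Prometheus is reachable at `PROM_URL` (default `http://localhost:9090`, e.g. through a `kubectl port-forward` to port 9090). `prom_query` is a hypothetical helper name; `/api/v1/query` is the instant-query endpoint of the Prometheus HTTP API.

```shell
# Assumption: Prometheus is reachable at PROM_URL (e.g. via kubectl port-forward).
PROM_URL="${PROM_URL:-http://localhost:9090}"

# prom_query is a hypothetical helper. -G makes curl send the
# --data-urlencode value as a URL query parameter instead of a POST body.
prom_query() {
  curl -sG "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}

# Usage (requires a running Prometheus):
#   prom_query 'up'
#   prom_query 'rate(prometheus_http_requests_total[5m])'
```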

Alertmanager

Prometheus Server => push alerts => Alertmanager => Email, Slack, etc.
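Alertmanager accepts alerts over HTTP, which is how Prometheus pushes them. A minimal sketch for pushing a hand-made test alert, assuming Alertmanager is reachable at `AM_URL` (default `http://localhost:9093`, 9093 being Alertmanager's default port). `send_test_alert` is a hypothetical helper and the labels are placeholders.

```shell
# Assumption: Alertmanager is reachable at AM_URL (e.g. via kubectl port-forward).
AM_URL="${AM_URL:-http://localhost:9093}"

# send_test_alert is a hypothetical helper. POST /api/v2/alerts takes a
# JSON array of alerts; each alert is identified by its labels.
send_test_alert() {
  alert_name="${1:-TestAlert}"
  curl -s -X POST "${AM_URL}/api/v2/alerts" \
    -H 'Content-Type: application/json' \
    -d "[{\"labels\":{\"alertname\":\"${alert_name}\",\"severity\":\"warning\"}}]"
}

# Usage: send_test_alert DemoAlert
```

Alertmanager then routes such an alert to whatever receivers (Email, Slack, etc.) its configuration defines.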

Prometheus UI

Deployment

How to deploy the different parts in Kubernetes cluster?

Setup with Helm chart

$ kubectl get pod

$ helm install prometheus stable/prometheus-operator

$ kubectl get pod

NAME ...

alertmanager-prometheus-prometheus-oper-alertmanager-0

prometheus-grafana-67...

prometheus-kube-state-metrics-c6...

prometheus-prometheus-node-exporter-jr...

prometheus-prometheus-oper-operator-78...

prometheus-prometheus-prometheus-oper-prometheus-0...
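The `stable` chart repository used above has since been archived; the prometheus-operator chart continues as `kube-prometheus-stack` in the prometheus-community repository. A sketch of the equivalent install, assuming Helm 3 and a kubectl context pointing at the target cluster. `install_stack` is a hypothetical helper, and resource names created by the newer chart will differ from the ones listed in these notes.

```shell
# Assumptions: Helm 3, kubectl pointed at the target cluster.
# install_stack is a hypothetical helper; the release name defaults to
# "prometheus" as in the notes above.
install_stack() {
  release="${1:-prometheus}"
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
  helm repo update
  helm install "$release" prometheus-community/kube-prometheus-stack
  # Same check as in the notes: list the Pods the chart created.
  kubectl get pod
}
```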

Prometheus Components

kubectl get all
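`kubectl get all` prints a lot. A small sketch that counts the returned objects per kind makes the breakdown into StatefulSets, Deployments, ReplicaSets and DaemonSets easy to check. `count_by_kind` is a hypothetical helper; it relies on `-o name` printing one `<kind>/<name>` line per object.

```shell
# count_by_kind is a hypothetical helper.
# -o name prints lines such as "statefulset.apps/alertmanager-...";
# cut keeps the kind, uniq -c counts, awk prints "<kind> <count>".
count_by_kind() {
  kubectl get all -o name | cut -d/ -f1 | sort | uniq -c | awk '{print $2, $1}'
}

# Usage: count_by_kind
```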

2 StatefulSets

Prometheus Server

statefulset.apps/prometheus-prometheus-prometheus-oper-prometheus

Alertmanager

statefulset.apps/alertmanager-prometheus-prometheus-oper-alertmanager

3 Deployments

Prometheus Operator - creates the Prometheus and Alertmanager StatefulSets

deployment.apps/prometheus-prometheus-oper-operator

Grafana

deployment.apps/prometheus-grafana

Kube State Metrics

deployment.apps/prometheus-kube-state-metrics

- has its own Helm chart

- installed as a dependency of this Helm chart

- generates metrics about the state of K8s objects (Deployments, Pods, ...) from the K8s API - K8s infrastructure monitoring

3 ReplicaSets

Created by the Deployments

replicaset.apps/prometheus-prometheus-oper-operator...

replicaset.apps/prometheus-grafana...

replicaset.apps/prometheus-kube-state-metrics...

1 DaemonSet

daemonset.apps/prometheus-prometheus-node-exporter

DaemonSet runs on every Worker Node (one node-exporter Pod per node)

- connects to the server, i.e. the Worker Node it runs on

- translates Worker Node metrics into Prometheus metrics - CPU usage, load on server
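Node exporter exposes what it collects as plain text in the Prometheus exposition format, which Prometheus then scrapes. A sketch for picking one value out of that text, assuming node-exporter's default port 9100 has been forwarded locally (the Pod name placeholder has to be taken from `kubectl get pod`). `metric_value` is a hypothetical helper and assumes samples carry no trailing timestamp.

```shell
# metric_value is a hypothetical helper: reads exposition-format text on
# stdin and prints the value of the first sample named $1, with or without
# labels. Comment lines (# HELP, # TYPE) are skipped. Assumes no timestamp
# follows the value, so the last field is the value.
metric_value() {
  awk -v m="$1" '$1 !~ /^#/ && ($1 == m || index($1, m "{") == 1) { print $NF; exit }'
}

# Usage (in one terminal): kubectl port-forward <node-exporter-pod> 9100
#        (in another):     curl -s http://localhost:9100/metrics | metric_value node_load1
```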

Completed tasks

- Monitoring Stack

- Configuration for your K8s cluster

- Worker Nodes monitored

- K8s components monitored

ConfigMaps

kubectl get configmap

- configurations for different parts

- managed by operator

- how to connect to default metrics

Secrets

kubectl get secret

- for Grafana

- for Prometheus

- for Operator

- certificates

- usernames & passwords

...

CRDs

kubectl get crd

- custom resource definitions: extensions of the Kubernetes API
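The operator's CRDs all belong to the `monitoring.coreos.com` API group, so they can be filtered out of the usually long `kubectl get crd` list. A sketch; `operator_crds` is a hypothetical helper name.

```shell
# operator_crds is a hypothetical helper. -o name prints lines like
# "customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com";
# keep only those ending in the operator's API group.
operator_crds() {
  kubectl get crd -o name | grep 'monitoring\.coreos\.com$'
}

# Usage: operator_crds
# Each CRD adds a resource type, e.g. `kubectl get prometheus` or `kubectl get servicemonitor`.
```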

Describe components

kubectl describe = container/image information (plain-text output, not YAML, even though it is saved to .yaml files below)

kubectl get statefulset

kubectl describe statefulset prometheus-prometheus-prometheus-oper-prometheus > prom.yaml

kubectl describe statefulset alertmanager-prometheus-prometheus-oper-alertmanager > alert.yaml

kubectl get deployment

kubectl describe deployment prometheus-prometheus-oper-operator > oper.yaml
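`kubectl describe` is meant for humans. For the container/image information alone, a JSONPath query is more compact, and `kubectl get <type> <name> -o yaml` returns the real manifest as YAML. A sketch; `list_images` is a hypothetical helper taking a `<type>/<name>` argument.

```shell
# list_images is a hypothetical helper: prints "container<TAB>image" for
# every container in the Pod template of a workload such as a StatefulSet.
list_images() {
  kubectl get "$1" -o jsonpath='{range .spec.template.spec.containers[*]}{.name}{"\t"}{.image}{"\n"}{end}'
}

# Usage:
#   list_images statefulset/prometheus-prometheus-prometheus-oper-prometheus
#   kubectl get statefulset prometheus-prometheus-prometheus-oper-prometheus -o yaml > prom-full.yaml
```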

StatefulSet oper-prometheus

Containers:

- prometheus

- Image: quay.io/prometheus/prometheus:v2.18.1

- Port: 9090/TCP

- Mounts: where Prometheus gets its configuration data mounted into the Prometheus Pod

  - /etc/prometheus/certs
  - /etc/prometheus/config_out
  - /etc/prometheus/rules/...
  - /prometheus

They are:

- Configuration file: what endpoints to scrape

  - addresses of applications that expose /metrics

- Rules configuration file: alerting rules, etc.

The two sidecar/helper containers (*-reloader) are responsible for reloading the configuration when the configuration files change.
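Roughly, what a reloader does after a change is ask Prometheus to re-read its configuration over HTTP. A manual equivalent as a sketch, assuming Prometheus runs with `--web.enable-lifecycle` (the flag that enables `POST /-/reload`) and is reachable at `PROM_URL` (default `http://localhost:9090`). `reload_prometheus` is a hypothetical helper.

```shell
# Assumptions: --web.enable-lifecycle is set; Prometheus reachable at PROM_URL.
PROM_URL="${PROM_URL:-http://localhost:9090}"

# reload_prometheus is a hypothetical helper; -f makes curl exit non-zero
# if Prometheus answers with an HTTP error status.
reload_prometheus() {
  curl -sf -X POST "${PROM_URL}/-/reload"
}

# Usage: reload_prometheus && echo "reload requested"
```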

ConfigMap and Secret (where the configuration is stored):

kubectl get configmap

kubectl get secret

In prom.yaml,

- Args:

--config-file=/etc/prometheus/config

- Mounts:

/etc/prometheus/config from config

/etc/prometheus/config_out from config_out

- Volumes:

config: a Secret

kubectl get secret prometheus-prometheus-prometheus-oper-prometheus -o yaml > secret.yaml

apiVersion: v1
data:
  prometheus.yaml.gz: ....
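Secret values are base64-encoded, and the `prometheus.yaml.gz` key is additionally gzip-compressed, so both layers have to be undone to read the generated Prometheus configuration. A sketch; `decode_gz_b64` is a hypothetical helper, and the dots in the key are escaped for kubectl's JSONPath.

```shell
# decode_gz_b64 is a hypothetical helper: reads a base64 string on stdin,
# decodes it, then decompresses the gzip data to stdout.
decode_gz_b64() {
  base64 -d | gunzip
}

# Usage:
#   kubectl get secret prometheus-prometheus-prometheus-oper-prometheus \
#     -o jsonpath='{.data.prometheus\.yaml\.gz}' | decode_gz_b64
```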

Rules file, in the rules-configmap-reloader container:

Mounts: /etc/prometheus/rules/prometheus-prometheus-prometheus-oper-prometheus-rulefiles-0 from prometheus-prometheus-prometheus-oper-prometheus-rulefiles-0

Volumes: ConfigMap prometheus-prometheus-prometheus-oper-prometheus-rulefiles-0

kubectl get configmap prometheus-prometheus-prometheus-oper-prometheus-rulefiles-0 -o yaml > config.yaml

apiVersion: v1
data:
  default-prometheus-prometheus-oper-alertmanager.rules.yaml: |
    groups:
    - name: alertmanager.rules
      rules:
      - alert: AlertmanagerConfigInconsistent
        ...
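For an overview of which alerts a rules file defines, a sketch that prints every `- alert:` name. `alert_names` is a hypothetical helper that reads rules YAML from stdin (for example the `config.yaml` dumped above); it does simple line matching, not real YAML parsing.

```shell
# alert_names is a hypothetical helper: prints the value of every
# "- alert: <Name>" line, ignoring leading indentation.
alert_names() {
  sed -n 's/^[[:space:]]*- alert:[[:space:]]*//p'
}

# Usage: alert_names < config.yaml
```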

StatefulSet alertmanager

Containers:

- alertmanager

  - Image: quay.io/prometheus/alertmanager:v0.20.0

  - Args: --config.file=/etc/alertmanager/config/alertmanager.yaml

- config-reloader

  - Image: docker.io/jimmidyson/configmap-reload:v0.3.0
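The Alertmanager configuration can be read from the cluster much like the Prometheus one. A sketch, assuming (not confirmed in these notes) that the operator takes `alertmanager.yaml` from a Secret named like the StatefulSet, `alertmanager-prometheus-prometheus-oper-alertmanager`; check the real name with `kubectl get secret`. `show_alertmanager_config` is a hypothetical helper.

```shell
# show_alertmanager_config is a hypothetical helper.
# Assumption: Secret name = StatefulSet name, key = alertmanager.yaml.
show_alertmanager_config() {
  secret="${1:-alertmanager-prometheus-prometheus-oper-alertmanager}"
  kubectl get secret "$secret" -o jsonpath='{.data.alertmanager\.yaml}' | base64 -d
}

# Usage: show_alertmanager_config
```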

Operator prometheus-operator

Containers:

Tasks

Access Grafana

$ kubectl get service

...

prometheus-grafana ClusterIP ...

ClusterIP = Internal Services

$ kubectl get deployment

...

prometheus-grafana

...

$ kubectl get pod

...

prometheus-grafana-67....

...

$ kubectl logs prometheus-grafana-67... -c grafana

...

... user=admin

...

... address=[::]:3000 ...

...

port: 3000

default user: admin

$ kubectl port-forward deployment/prometheus-grafana 3000

Forwarding from 127.0.0.1:3000 -> 3000

Forwarding from [::1]:3000 -> 3000

Then Grafana can be accessed via http://localhost:3000

The default admin password is "prom-operator", which can be found in the chart documentation: https://github.com/helm/charts/tree/master/stable/prometheus-operator#...
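Instead of looking the password up in the chart documentation, it can be read from the cluster. A sketch, assuming the Grafana sub-chart stores its credentials in a Secret named `prometheus-grafana` under the keys `admin-user` and `admin-password`. `grafana_admin_password` is a hypothetical helper.

```shell
# grafana_admin_password is a hypothetical helper.
# Assumption: Secret "prometheus-grafana" with key "admin-password".
grafana_admin_password() {
  kubectl get secret prometheus-grafana -o jsonpath='{.data.admin-password}' | base64 -d
}

# Usage: grafana_admin_password; echo
```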

$ kubectl get pod

...

prometheus-kube-state-metrics-c6...

prometheus-prometheus-node-exporter-jr...

...

Prometheus UI

$ kubectl get pod

...

prometheus-prometheus-prometheus-oper-prometheus-0

...

$ kubectl port-forward prometheus-prometheus-prometheus-oper-prometheus-0 9090

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090

Then the Prometheus UI can be accessed via http://localhost:9090/.
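While the port-forward is running, the targets API shows whether Prometheus can actually scrape its endpoints. A rough sketch, assuming Prometheus is reachable at `PROM_URL` (default `http://localhost:9090`). `count_target_health` is a hypothetical helper; it counts the `"health"` fields with grep instead of parsing the JSON, which is fine for a quick check but not robust.

```shell
# count_target_health is a hypothetical helper: prints how many active
# targets report each health value ("up", "down", "unknown").
count_target_health() {
  curl -s "${PROM_URL:-http://localhost:9090}/api/v1/targets" |
    grep -o '"health":"[a-z]*"' | sort | uniq -c
}

# Usage: count_target_health
```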

Summary

- Deployed Prometheus stack using Helm

- Overview of what these different components are and do

- Configure additional metrics endpoint

References

Setup Prometheus Monitoring on Kubernetes using Helm and Prometheus Operator | Part 1